You are here

MariaDB Push Replication

Table of Contents

- How to make MariaDB Pull Replication as secure as possible

- MariaDB Push Replication

- Pushing data with the FederatedX Storage Engine and Triggers

- Traffic mirroring with MariaDB MaxScale or ProxySQL

How to make MariaDB Pull Replication as secure as possible

A normal MariaDB Replication is a Pull Replication. This means that a Slave connects to its Master and gathers or better requests Binary Log information from the Master and applies them in a streaming way.

In some set-ups the Slave is located in a less secure network zone and the Master is located in a more secure network zone. So from the security point of view a permanent connection from the less secure zone to the more secure zone is sometimes not acceptable. We had those discussions already 2 times in the last few months with Chief Security Officers (CSO) of our clients.

Arguing for the MariaDB Pull Replication

How can you secure the Master/Slave set-up in this case:- On the Master:

- There has to be a user with the

REPLICATION SLAVEprivilege and no other privileges. This means that this user is only allowed to request Binary Logs and nothing else. - This user can additionally be restricted to the IP address of the Slave machine (e.g.

'replication'@'192.168.1.42'). And thus only from this Slave machine this user can access to its Master. - Additionally firewall rules (

iptables) can further restrict access from source IP (Slave) to destination IP (Master) on Port (3306) and Protocol (TCP) between Master and Slave. So nobody can get anywhere else from the non secure zone. - Only allow SSL connections from the Slave to the Master (

require_secure_transportorREQUIRE SSL). - The use of secure password goes without saying.

- There has to be a user with the

- On the Slave:

- Access only via SSL to the Master (this can be enforced on the Master). So nobody can listen to the Slave → Master → Slave communication. This can be enforced globally or per account. If this is not sufficient the whole set-up can be secured with VPN (stability?).

- If you upgrade Master and Slave on a regular base every 3 months (MariaDB/Oracle CPU) the chances are very small to be hurt by potential security holes.

- With an intrusion detection system and an data integrity tools you can further secure your Slave (and Master) system and detect potential manipulations or attacks.

- Security features like SElinux (Rocky Linux, RedHat and SuSE) and AppArmor (Debian and Ubuntu) additionally can be activated (or better should not be disabled at all!).

- If somebody manages to take over the machine of your Slave in the less secure zone (with O/S user root) you have:

- Done something wrong.

- Lost anyway.

If this arguments are not sufficient to convince your Chief Security Officer (CSO) we have some other ideas how to deal with the problem:

MariaDB Push Replication

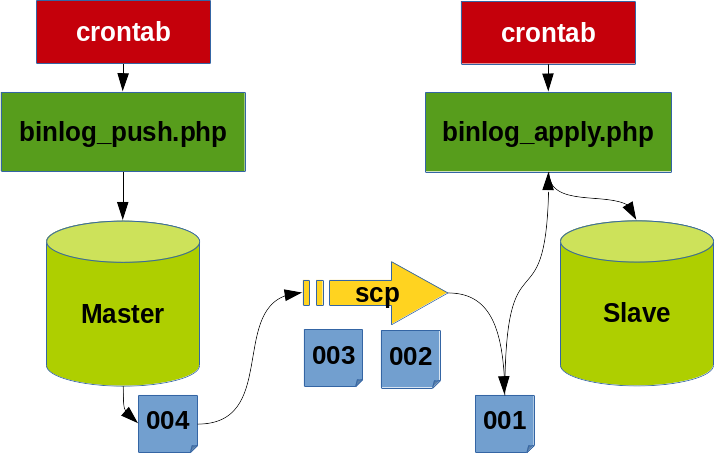

The first idea is that we do a Push Replication from the more secure network zone to the less secure network zone instead of a Pull Replication. But MariaDB does not provide this feature natively. So we have to build it ourself. For a Proof of Concept (PoC) we wrote 2 little programs:

- One for pushing the Binary Logs from the Master to Slave (

binlog_push.php) and - another program for applying the Binary Logs (

binlog_apply.php) on the Slave.

These 2 programs are started every minute via crontab. So we get a pulsating Push Replication with a maximum lag of about 3 minutes. With this rhythm after barely 2 years the 6-digit Binary Log numbers will overflow. But Monty stated that the Binary Log numbers then just become 7-digit long.

We were running this PoC with our new mixed test workload (mixed_test.php) and it looks like this way of Push Replication is working correctly (data on Master and Slave were the same). This mechanism behaves similarly like the normal MariaDB Master/Slave Pull Replication: The push program will throw errors if there is a problem and the apply program will also throw an error and stop if it cannot apply the Binary Log events.

Current limitations are:

- Only one Slave is supported.

- Only full Binary Log Push is supported. Partial Binary Log Push could be implemented.

- GTID based Slave set-up is not considered (or better tested) yet.

The push program on the Master is simply started like this (as alternative to crontab):

shell> watch -n 60 ./binlog_push.php

The Slave is set-up as normal and the Binary Log applier program on the Slaves ist started like this:

shell> mariadb-dump --user=root --master-data=1 --single-transaction --all-databases | mariadb --user=root shell> ./binlog_apply.php --start-logfile=binlog.000001 --start-position=678901234 shell> watch -n 60 ./binlog_apply.php

Pushing data with the FederatedX Storage Engine and Triggers

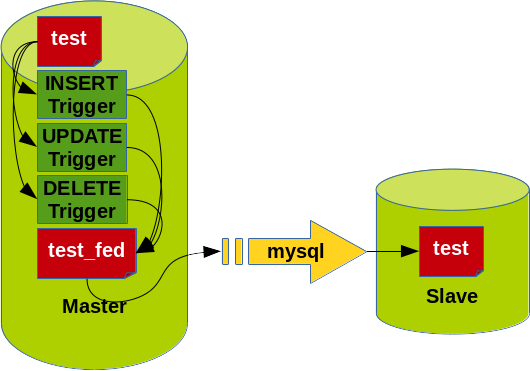

An other possibility to transfer the data from the Master to the Slave is using the FederatedX Storage Engine and Triggers to move the data from the original tables to the FederatedX tables.

This method is a bit less convenient if you want to transfer the data of many or all tables to the Slave. If you want to transfer only a few tables this might work quite well.

Creating the FederatedX tables with federated Server as data source:

SQL> SELECT plugin_name, plugin_version, plugin_maturity FROM information_schema.plugins WHERE plugin_type = 'STORAGE ENGINE' AND plugin_name = 'FEDERATED' ; +-------------+----------------+-----------------+ | plugin_name | plugin_version | plugin_maturity | +-------------+----------------+-----------------+ | FEDERATED | 2.1 | Stable | +-------------+----------------+-----------------+ SQL> INSTALL SONAME 'ha_federatedx';

SQL> CREATE SERVER 'mysql-57' FOREIGN DATA WRAPPER 'mysql' OPTIONS ( HOST '127.0.0.1' , PORT 3320 , SOCKET '' , USER 'app' , PASSWORD 'secret' , DATABASE 'test' ); SQL> SELECT * FROM mysql.servers; +-------------+-----------+------+----------+----------+------+--------+---------+-------+ | Server_name | Host | Db | Username | Password | Port | Socket | Wrapper | Owner | +-------------+-----------+------+----------+----------+------+--------+---------+-------+ | mysql-57 | 127.0.0.1 | test | app | secret | 3320 | | mysql | | +-------------+-----------+------+----------+----------+------+--------+---------+-------+ SQL> CREATE TABLE `test_fed` ( `id` int(10) unsigned NOT NULL AUTO_INCREMENT, `data` varchar(128) DEFAULT NULL, `ts` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, PRIMARY KEY (`id`) ) ENGINE="FEDERATED" DEFAULT CHARSET=latin1 CONNECTION='mysql-57' ; ERROR 1434 (HY000): Can't create federated table. Foreign data src error: database: 'test' username: 'app' hostname: '127.0.0.1' SQL> show warnings; +---------+------+-----------------------------------------------------------------------------------------------------------------+ | Level | Code | Message | +---------+------+-----------------------------------------------------------------------------------------------------------------+ | Error | 1434 | Can't create federated table. Foreign data src error: database: 'test' username: 'app' hostname: '127.0.0.1' | | Warning | 1030 | Got error 1 "Operation not permitted" from storage engine FEDERATED | +---------+------+-----------------------------------------------------------------------------------------------------------------+

There reason for this error was just, that the underlying table on the remote system did not exist! But when we found out what was the problem we realized that a SERVER is possibly not the way we want to do it because the tables on Master and Slave must be named the same. Probably it is better to use direct connections because then we can have different table names on Master an Slave.

It would be a nice feature to have some kind of rewrite for tables in the SERVER.

Creating the FederatedX tables with a direct connection:

SQL> CREATE TABLE `test_fed` ( `id` int(10) unsigned NOT NULL AUTO_INCREMENT, `data` varchar(128) DEFAULT NULL, `ts` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, PRIMARY KEY (`id`) ) ENGINE="FEDERATED" DEFAULT CHARSET=latin1 CONNECTION='mysql://app:secret@127.0.0.1:3320/test/test' ; SQL> SELECT * FROM test_fed; +----+----------------------------------+---------------------+ | id | data | ts | +----+----------------------------------+---------------------+ | 1 | Test data insert | 2021-01-06 09:27:22 | | 2 | Test data insert | 2021-01-06 09:27:03 | | 3 | Test data insert | 2021-01-06 09:25:37 | +----+----------------------------------+---------------------+

The FederateX variable federated_pushdown does currently not work properly for me and is buggy (MDEV-2453):

SQL> SHOW VARIABLES LIKE '%federat%'; +--------------------+-------+ | Variable_name | Value | +--------------------+-------+ | federated_pushdown | OFF | +--------------------+-------+ SQL> SET GLOBAL federated_pushdown = on; SQL> SELECT id, data FROM test_fed WHERE id = 1; ERROR 1030 (HY000): Got error 10000 "Unknown error 10000" from storage engine FEDERATED

Creating the Triggers to feed the FederatedX tables

Now we have the connection between Master and Slave but somehow the data must come from the main table (test) to the FerderatedX table (test_fed). This will be achieved by Triggers:

SQL> DELIMITER // CREATE TRIGGER test_insert AFTER INSERT ON test FOR EACH ROW INSERT INTO test_fed VALUES (NEW.id, NEW.data, NEW.ts) ; // CREATE TRIGGER test_update AFTER UPDATE ON test FOR EACH ROW UPDATE test_fed SET id = NEW.id, data = NEW.data, ts = NEW.ts WHERE id = OLD.id ; // CREATE TRIGGER test_delete AFTER DELETE ON test FOR EACH ROW DELETE FROM test_fed WHERE id = OLD.id ; // DELIMITER ; SQL> SELECT CONCAT(trigger_schema, '.', trigger_name) AS 'trigger', event_manipulation AS event, CONCAT(event_object_schema, '.', event_object_table) AS 'table', action_timing AS timing FROM information_schema.triggers; +------------------+--------+-----------+--------+ | trigger | event | table | timing | +------------------+--------+-----------+--------+ | test.test_insert | INSERT | test.test | AFTER | | test.test_update | UPDATE | test.test | AFTER | | test.test_delete | DELETE | test.test | AFTER | +------------------+--------+-----------+--------+

After running our mixed_test.php again the comparison of the data was fine. Number and content was the same.

Literature

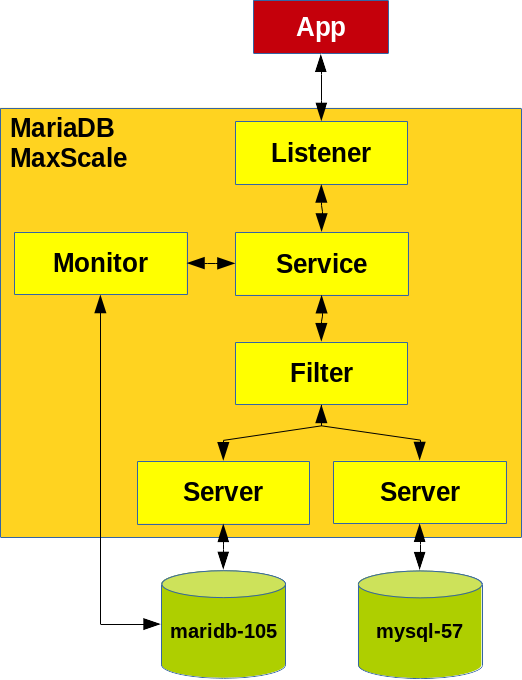

Traffic mirroring with MariaDB MaxScale or ProxySQL

The last idea, which comes to my mind, is mirroring the traffic with a Proxy for example MariaDB MaxScale or ProxySQL as described in the article: Traffic mirroring with MariaDB MaxScale.

The disadvantage here is, at least under high pace, that we loose some information (statements) on the tee'd instance. At least for MariaDB MaxScale. It was further suggested to use the Mirror Router instead of the Tee Filter. If this also happens with ProxySQL we cannot say yet. So this method is possibly not ideal for reliably pushing data from a Master to a Slave right now.

There is an open Bug which is currently under investigation: Tee filter loses statements if branch target is slower.

Literature

- Shinguz's blog

- Log in or register to post comments