You are here

MySQL HA (high availability) Cluster cookbook

In the following article I have summarized some steps and hints to set-up a MySQL active/passive failover Cluster also sometimes called MySQL HA.

With such a set-up you can achieve a 99.99% (4x9) HA set-up for MySQL (52 minutes downtime per year). The same procedure also works for PostgreSQL, Oracle and other database systems running on Linux (DRBD runs on Linux only).

The concept

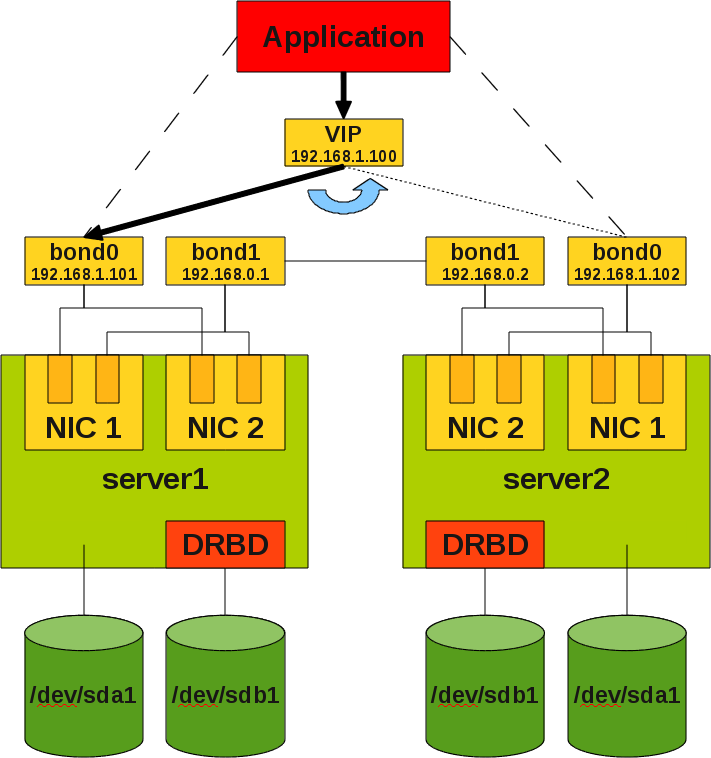

The concept of an active/passive failover Cluster is the following:

- You have 2 servers (also called nodes).

- They communicate over a cluster software (Heartbeat, Corosync, OpenAIS, Red Hat Cluster Suite).

- They are running on DRBD or have a shared storage (SAN, NAS) connected to both nodes.

- MySQL is only running on ONE node (active), the other node does nothing (passive).

- You reach MySQL over a Virtual IP (VIP)

- In case of a problem the cluster software should detect those and failover the resources including the VIP to the passive node. There your database should continue working a few minutes (from < 1 Min up to > 60 Min) later.

- There should be no need to reconfigure or change anything in your application.

Comment about the used tools and their versions

The following description bases on MySQL 5.1 (the database version does not really matter here). It was set-up on CentOS 5.5 but other Linux distributions and versions should work similarly.

Further we used DRBD v8.3.8 and Heartbeat v3.0.3. We configured Heartbeat to use the version 1 mode because of 3 reasons:

- We got troubles with Corosync, Pacemaker and Heartbeat (node was shutdown without any obvious reason because of wrong return codes of the underlying Heartbeat scripts) and we found at least one bug in Corosync.

- Pacemaker/Corosync is IMHO more difficult and, at least for me, less transparent than the old Heartbeat version 1 mode. IMHO it is an overkill for a simple active/passive failover Cluster.

- Configuration files are human readable and pretty simple.

I am aware, that with this opinion I am antiquated! The modern way to do it is Pacemaker/Corosync. We will investigate about this solution later again...

Before you start

Before you start I recommend you to do a little sketch with all relevant components on it:

Hardware preconditions

For testing purposes we were using 2 virtual machines on a VirtualBox from Oracle. In practices you should avoid virtual systems because of performance reasons. Further with Enterprise VM servers (as for example VMWare ESX server) you have such failover capabilities already implemented on VM level. So IMHO it does not make sense to have failover capabilities on two layers...

An other approach for getting High Availability when you do not have an ESX server you can find here: MySQL on VMware Workstation/DRBD vs. VMWare ESX Server/SAN.

Typically it is a good idea for HA reasons to have 2 network cards with 2 ports each so we can bind 1 port of each network card together to a bond (make sure that you bind 2 ports of different cards, not of the same card, otherwise it will not make much sense).

If you have 2 independent power supplies for each node it will not hurt.

On the disk system ideally you have a RAID-1 or RAID-10 for redundancy. When you have a SAN or similar it could make sense to attach the SAN with 2 paths to each server (multipathing, this is not discussed further here).

More redundancy typically means more availability but also more complexity.

So for our set-up we have 2 (virtual) machines with 4 network ports each and a local attach storage with 2 devices (partitions, disks). One device for DRBD and one device for all the other stuff...

Network and Operating System settings

Typically it makes sense to work as user root for setting-up the cluster software. The following steps have to be taken on both nodes.

Before you begin it is a good idea to do an update of the operating system and all installed packages. You can avoid some troubles which are already known and fixed:

# yum update # yum upgrade

At least for testing purposes you should disable the firewall between the cluster nodes and between the ping nodes. Clusters are communicating over various ports to different locations so firewalls between cluster nodes just disturbs in the beginning.

If you are more advanced you can think about having useful firewall settings around your cluster.

To check, stop and disable firewall use the following command:

# iptables -L # service iptables stop # chkconfig iptables off

On CentOS it looks like SELinux is installed and enabled by default. This just disturbs for testing and thus we disable it:

# sestatus -v # setenforce 0

To make it persistent after a reboot we also have to set it in the configuration file:

# # /etc/selinux/config # ... SELINUX=disabled ...

To keep things simple we use short network names:

# # /etc/sysconfig/network # ... HOSTNAME=server1

And for ease of use we give the servers meaningful names:

# # /etc/hosts # ... 192.168.1.101 server1.fromdual.com server1 192.168.1.102 server2.fromdual.com server2

To make the change visible for the system (without a reboot) you have also to set it manually:

# hostname server1

When we refer to a server name it should match the following command:

# uname -n server1

Time synchronization NTP

It is important that all nodes in a cluster have the same and correct time. In case of troubles this makes searching for errors much easier.

To have a correct time on your server install a NTP client:

# yum install ntp # chkconfig ntpd on # ntpdate pool.ntp.org # /etc/init.d/ntpd start

Network - Bonding

Before setting up anything else it makes sense to set-up the bonding stuff first. Then you can test if the bonding works and then forget about it for the later steps.

In our set-up we have 2 servers with 2 network cards and 2 ports each. So we bind port 0 from network card 1 with port 0 from network card 2 to bond0 and port 1 from network card 1 with port 1 from network card 2 to bond1 (see sketch above).

In our set-up we decided to use the bond0 for external communication and bond1 for the internal node to node communication.

To configure a bond manually you can use the following commands:

# modprobe bonding mode=1 miimon=100 # ifconfig eth0 down # ifconfig eth2 down # ifconfig bond0 192.168.1.101 up # ifenslave bond0 eth0 # ifenslave bond0 eth2

On my virtual machine I got a kernel panic when I used the wrong interfaces! :) So be careful! But this should not happen on real boxes. Maybe I just did something wrong with my VM configuration...

To check if bonding works correctly the following commands can help:

# cat /sys/class/net/bond0/bonding/slaves eth0 eth2

and

# cat /proc/net/bonding/bond0 Ethernet Channel Bonding Driver: v3.4.0 (October 7, 2008) Bonding Mode: fault-tolerance (active-backup) Primary Slave: None Currently Active Slave: eth0 MII Status: up MII Polling Interval (ms): 100 Up Delay (ms): 0 Down Delay (ms): 0 Slave Interface: eth0 MII Status: up Link Failure Count: 0 Permanent HW addr: 08:00:27:ca:2d:f1 Slave Interface: eth2 MII Status: up Link Failure Count: 0 Permanent HW addr: 08:00:27:fb:75:48

and

# ifconfig

bond0 Link encap:Ethernet HWaddr 08:00:27:CA:2D:F1

inet addr:192.168.1.101 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::a00:27ff:feca:2df1/64 Scope:Link

UP BROADCAST RUNNING MASTER MULTICAST MTU:1500 Metric:1

RX packets:41 errors:0 dropped:0 overruns:0 frame:0

TX packets:70 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:10453 (10.2 KiB) TX bytes:14463 (14.1 KiB)

eth0 Link encap:Ethernet HWaddr 08:00:27:CA:2D:F1

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:20 errors:0 dropped:0 overruns:0 frame:0

TX packets:42 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:5069 (4.9 KiB) TX bytes:8746 (8.5 KiB)

eth2 Link encap:Ethernet HWaddr 08:00:27:CA:2D:F1

UP BROADCAST RUNNING SLAVE MULTICAST MTU:1500 Metric:1

RX packets:21 errors:0 dropped:0 overruns:0 frame:0

TX packets:28 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:5384 (5.2 KiB) TX bytes:5717 (5.5 KiB)

To destroy the bonding again you can use the following commands:

# ifenslave -d bond0 eth0 # ifenslave -d bond0 eth2 # ifconfig bond0 down # ifconfig eth0 192.168.1.101 up # ifconfig eth2 192.168.1.103 up

To make the bond permanent you have to change the following configuration files:

# # /etc/sysconfig/network-scripts/ifcfg-bond0 # DEVICE=bond0 BOOTPROTO=static ONBOOT=yes NETWORK=192.168.1.0 NETMASK=255.255.255.0 IPADDR=192.168.1.101 USERCTL=no BONDING_OPTS="mode=active-backup miimon=100" GATEWAY=192.168.1.1

# # /etc/sysconfig/network-scripts/ifcfg-eth[02] # DEVICE=eth0 BOOTPROTO=none ONBOOT=yes HWADDR=08:00:27:ca:2d:f1 MASTER=bond0 SLAVE=yes USERCTL=no

# # /etc/modprobe.conf # ... alias bond0 bonding

After the network restart the bond should show up:

# service network restart

Testing

To make sure the actual bonding works we typically use ping on the IP address and unplug the cables.

Literature

Installing DRBD

Installing DRBD 8.3 works straight forward. On both machines:

# yum install drbd83 kmod-drbd83 # lsmod | grep drbd

If the module is not loaded automatically you can load it with the following command:

# modprobe drbd

Then prepare your device/partition:

# fdisk -l # fdisk /dev/sdb h for help p for print the partition table n for new partition p for primary partition 1 number of partition <CR><CR> w for write partition table

Then we can start configuring DRBD. The configuration of DRBD is done in the following file:

#

# /etc/drbd.conf

#

global {

usage-count no;

}

common {

protocol C;

syncer {

rate 100M;

al-extents 1801;

}

startup {

degr-wfc-timeout 0;

}

disk {

on-io-error detach;

}

net {

after-sb-0pri disconnect;

after-sb-1pri disconnect;

after-sb-2pri disconnect;

rr-conflict disconnect;

}

}

resource drbd_r1 {

handlers {

pri-on-incon-degr "echo 0 > /proc/sysrq-trigger ; halt -f";

pri-lost-after-sb "echo 0 > /proc/sysrq-trigger ; halt -f";

local-io-error "echo 0 > /proc/sysrq-trigger ; halt -f";

}

on server1 {

device /dev/drbd0;

disk /dev/sdb1;

meta-disk internal;

address 192.168.0.1:7789;

}

on server2 {

device /dev/drbd0;

disk /dev/sdb1;

meta-disk internal;

address 192.168.0.2:7789;

}

}

To avoid problems make sure, the configuration file is equal on both nodes. Then on both nodes you have to run the following commands:

# drbdadm create-md drbd_r1 # drbdadm up drbd_r1

drbdadm up includes the following steps:

drbdadm attach drbd_r1drbdadm syncer drbd_r1drbdadm connect drbd_r1

With the following command you can see what DRBD does:

# watch -d -n 1 "cat /proc/drbd"

You should get a value of: Inconsistent/Inconsistent.

Then we do an initial device synchronization on one node:

# drbdadm -- --overwrite-data-of-peer primary drbd_r1

Now we should get a value of Secondary/Secondary with cat /proc/drbd

To make one DRBD node primary (with MySQL databases we should only have Primary/Secondary roles, never Primariy/Primary otherwise you destroy your InnoDB data files) run the following command:

# drbdadm primary drbd_r1

Then we can format and mount the device:

# mkfs.ext3 /dev/drbd0 # mount /dev/drbd0 /data/mysql

Do not add the device to the fstab. This resource will be controlled by Heartbeat later. If you add it to the fstab this will cause some conflicts during reboot of the server.

To manually failover the DRBD device you should proceed as follows on the node where the DRBD device is mounted and/or Primary:

# umount /data/mysql # drbdadm secondary drbd_r1

Then on the other node:

# drbdadm primary drbd_r1 # mount /dev/drbd0 /data/mysql

This will be later automatized with the cluster suite (Heartbeat).

Literature

Installing MySQL

Installing MySQL is straight forward. Just use your preferred MySQL installation method:

# yum install mysql-server

I personally prefer to install MySQL on each server and do not place the MySQL binaries on a DRBD device. This has the advantage that you can upgrade the passive node first and then do a failover and later on upgrade the other node.

If you put the MySQL binaries on the DRBD device you have the advantage that you only have to upgrade the binaries once.

To avoid different MySQL configurations on both nodes I prefer to locate the my.cnf on the DRBD device. This has the big advantage that you never have different configurations on both nodes and you will not experience bad surprises after a failover.

To make this possible we only have a very small /etc/my.cnf:

# # /etc/my.cnf # !include /data/mysql/my.cnf

Then we install our database on the mounted drbd device:

# mysql_install_db --datadir=/data/mysql --user=mysql

Ideally you clean up the already installed /var/lib/mysql to avoid confusions.

Then we create our my.cnf:

# # /data/mysql/my.cnf # [client] port = 3306 socket = /var/lib/mysql/mysql.sock [mysqld] port = 3306 socket = /var/lib/mysql/mysql.sock datadir = /data/mysql user = mysql memlock = 1 table_open_cache = 3072 table_definition_cache = 1024 max_heap_table_size = 64M tmp_table_size = 64M # Connections max_connections = 505 max_user_connections = 500 max_allowed_packet = 16M thread_cache_size = 32 # Buffers sort_buffer_size = 8M join_buffer_size = 8M read_buffer_size = 2M read_rnd_buffer_size = 16M # Query Cache query_cache_size = 64M # InnoDB default_storage_engine = InnoDB innodb_buffer_pool_size = 1G innodb_data_file_path = ibdata1:2G:autoextend innodb_log_file_size = 128M innodb_log_files_in_group = 2 # MyISAM myisam_recover = backup,force # Logging log_warnings = 2 log_error = /data/mysql/error.log slow_query_log = 1 slow_query_log_file = slow.log long_query_time = 0.5 log_queries_not_using_indexes = 1 min_examined_row_limit = 20 # Binary Log / Replication server_id = 1 log-bin = mysql-bin binlog_cache_size = 1M sync_binlog = 8 binlog_format = row expire_logs_days = 7 max_binlog_size = 128M [mysqldump] quick max_allowed_packet = 16M [mysql] no_auto_rehash [myisamchk] key_buffer = 512M sort_buffer_size = 512M read_buffer = 8M write_buffer = 8M [mysqld_safe] open-files-limit = 8192 log-error = /data/mysql/error.log

Because MySQL will be controlled by the Cluster Software (Heartbeat) we have to disable the automated start/stop mechanism on both nodes:

# chkconfig --list mysqld # chkconfig mysqld off

Now we can try a manual failover including MySQL. Start with the active node:

# /etc/init.d/mysql stop # umount /data/mysql # drbdadm secondary drbd_r1

Then on the other node:

# drbdadm primary drbd_r1 # mount /dev/drbd0 /data/mysql # /etc/init.d/mysql start

Heartbeat

As stated above Heartbeat is not a contemporary tool any more. Nevertheless it is quite easy to configure, straight forward and has human readable configuration files.

To install Heartbeat use:

# yum install heartbeat heartbeat.x86_64

You can find some sample configurations under /usr/share/doc/heartbeat-<version>

We configure the Heartbeat in the old (v1) style. XML is not a format made for humans and old style Heartbeat configurations are easily human readable:

There are 3 files located under /etc/ha.d/

# # /etc/ha.d/ha.cf # node server1 node server2 auto_failback off # You should have 2 of them! bcast bond1 bond0 logfacility local0 logfile /var/log/ha.log debugfile /var/log/ha.debug keepalive 500ms warntime 5 deadtime 10 initdead 120 # Mgmt console as external reference ping 192.168.1.1 respawn hacluster /usr/lib64/heartbeat/ipfail apiauth ipfail gid=haclient uid=hacluster deadping 15 mcast bond1 225.0.0.1 694 2 0 mcast bond0 225.0.0.2 694 1 0 respawn hacluster /usr/lib64/heartbeat/dopd apiauth dopd gid=haclient uid=hacluster crm off

# # /etc/ha.d/authkeys # auth 1 1 sha1 FromDual

#

# /etc/ha.d/haresources

#

server1 drbddisk::drbd_r1 \

Filesystem::/dev/drbd0::/data/mysql::ext3 \

Filesystem::/dev/mpath/mpath2p1::/data/backup::ext3 \

mysql \

IPaddr::192.168.1.100

# IPaddr::192.168.1.100 \

# mon \

# Crontab::mysql::/data/mysql/crontab.txt

And finally the authkeys file should be secured:

# chmod 600 /etc/ha.d/authkeys

Under normal circumstances all 3 files should be the same on both nodes of our Cluster. To ensure this you can either edit them on one node and distribute them with the following command to the other node:

# /usr/share/heartbeat/ha_propagate

or if you prefer to configure them on both nodes independently I usually use this method to compare them:

# md5sum ha.cf authkeys haresources

Now stop all resources and start Heartbeat on both nodes at the same time (more or less):

/etc/init.d/heartbeat start

You should see MySQL, DRBD and the VIP started on server1. Try to access you MySQL database from remote through the VIP.

remote# mysql --user=test --host=192.168.1.100 --port=3306 --password

If this works we try a manual failover. On the passive (standby) node run the following command:

# /usr/share/heartbeat/hb_takeover

Follow all the steps in the syslog on both nodes:

# tail -f /var/log/messages

If everything is OK all the resources should be move now to server2.

If you run into error or warning messages try to find out what it is and fix it. One thing we found is that the start/stop script of mysqld in CentOS 5.5 seems not to work with Heartbeat. After we changed to following line in the script it worked fine for us!

# diff /etc/init.d/mysqld.orig /etc/init.d/mysqld 115c115 < ret=1 --- > ret=0

Testing

When your MySQL HA Cluster works properly without any error message and you manage to failover for- and backwards it is time to test all possible different scenarios which can go wrong:

- Disk failures (just unplug the disks)

- Multipathing failover if you have such.

- Stopping nodes hard (power off)

- Restarting a server (init 6)

- Unmounting file system

- Try to kill DRBD

- Killing MySQL

- Stopping VIP

- Stopping Heartbeat

- Bonding failover (unplug one cable)

- Split brain (disconnect the interconnect cables simultaneously)

- etc.

Theoretically all those cases should be handled by your set-up properly.

Monitoring

When you stop/kill MySQL or the VIP you will notice that no failover happens. Heartbeat will not notice this "failure" and will not act accordingly.

If you want to failover in such a case is to discuss and depends on your personal flavour. We typically in such a situation set-up a monitoring solution on the active node which just stops Heartbeat and triggers a failover if MySQL is not reachable any more over the VIP.

The philosophy behind this decision is that it is worse for the customer when he cannot work for 15 or 30 minutes until we found the problem and restarted MySQL or the VIP. So we trigger a failover an hope MySQL comes up properly on the other side again. In most of the cases this should be the case.

If not it is still the right time after the failover to figure out what went wrong. We stop Heartbeat to guarantee that no failback will take place without human intervention. Just do not forget to restart Heartbeat on this node after you found what was the problem.

In our set-ups we typically use mon to monitor the MySQL service. Mon is configured as follows:

#

# /etc/mon/mon.cf

#

monerrfile = /var/log/mon.log

mondir = /usr/lib64/mon/mon.d

alertdir = /usr/lib64/mon/alert.d

maxprocs = 20

histlength = 100

randstart = 2s

hostgroup check 192.168.1.100

watch check

service mysql

interval 5s

monitor mysql.monitor --maxfail=3 --user=check_db --password=check_db \

--database=check_db --host=192.168.1.100

period wd {Mon-Sun} hr {0am-11pm}

alertafter 3

alertevery 15m

alert mail.alert contact@fromdual.com

alert stop-heartbeat.alert

alert file.alert /var/log/ping_check.log

All those events should be monitored and immediately reported to the DBA/System Administrator. So he can investigate immediately what has happened and fix the problem to avoid a later complete system outage.

To monitor DRBD we have added a module to our MySQL Performance Monitor for MySQL. When DRBD is in the wrong state an alert can be risen.

It is further a good idea to regularly check a cluster failover. I recommend once every 3 month. If you trust your cluster set-up that is 15 minutes of work off your peak hour. If you do NOT trust your cluster set-up you should not use it at all.

Trouble shooting

If you once manage to run into a Split Brain situation with DRBD find out which is the side you want to continue with and then do on one side where status is cs:StandAlone ro:Primary/Unknown:

# drbdadm connect drbd_r1

and on the other side:

# drbdadm down drbd_r1 # drbdadm attach drbd_r1 # drbdadm -- --discard-my-data connect drbd_r1

Be careful: This destroys your data on the DRBD node you run this command! Ideally you do a backup of both nodes beforehand.

And now have fun setting up your MySQL HA set-up...

Literature

- DRBD product page

- DRBD documentation

- The DRBD User's Guide

- MySQL - High Availability and Scalability

- The Linux-HA User’s Guide

- MySQL HA with DRBD and Heartbeat on CentOS 5.5

- Sample Configuration of DRBD On CentOS 4.5

- CentOS – Install and Configure DRBD

- CentOS HowTo HA-DRBD

- HA cluster with DRBD and Heartbeat

- Shinguz's blog

- Log in or register to post comments

Comments

Ein paar Anmerkungen:

replication vs HA setup

pros vs cons of using replication rather than the drbd method

Other node cannot even respond to read only queries?

MYSQL Installation

unknown variable 'table_open_cache=3072'

Help with MySQL and HeartBeat

VIP

CentOS 6 and bonding

/etc/modprobe.confhas been deprecated in CentOS 6, the process of bonding network interfaces has changed a bit. Now instead of defining your bond in your/etc/modprobe.conf, you define it in/etc/modprobe.d/bonding.conf[ 1 ].