You are here

Replication channel failover with Galera Cluster for MySQL

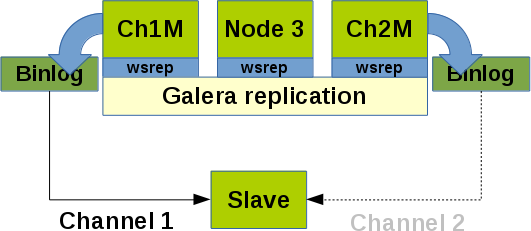

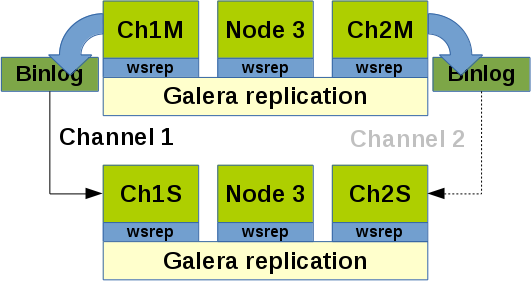

Sometimes it could be desirable to replicate from a Galera Cluster to a single MySQL slave or to an other Galera Cluster. Reasons for this measure could be:

- An unstable network between two Galera Cluster locations.

- A separation of a reporting slave and the Galera Cluster so that heavy reports on the slave do not affect the Galera Cluster performance.

- Mixing different sources in a slave or a Galera Cluster (fan-in replication).

This article is based on earlier research work (see MySQL Cluster - Cluster circular replication with 2 replication channels) and uses the old MySQL replication style (without MySQL GTID).

Preconditions

- Enable the binary logs on 2 nodes of a Galera Cluster (we call them channel masters) with the

log_binvariable. - Set

log_slave_updates = 1on ALL Galera nodes. - It is recommended to have small binary logs and relay logs in such a situation to reduce overhead of scanning the files (

max_binlog_size = 100M).

Scenarios

Let us assume that for some reason the actual channel master of channel 1 breaks. As a consequence the slave of channel 1 does not receive any replication events any more. But we have to keep the replication stream up and running. So we have to switch the replication channel to channel master 2.

Switching replication channel

First for security reasons we should stop the slave of replication channel 1 first:

mysql> STOP SLAVE;

Then we have to find the actual relay log on the slave:

mysql> pager grep Relay_Log_File mysql> SHOW SLAVE STATUS\G mysql> nopager Relay_Log_File: slave-relay-bin.000019

Next we have to find the last applied transaction on the slave:

mysql> SHOW RELAYLOG EVENTS IN 'slave-relay-bin.000019'; | slave-relay-bin.000019 | 3386717 | Query | 5201 | 53745015 | BEGIN | | slave-relay-bin.000019 | 3386794 | Table_map | 5201 | 53745067 | table_id: 72 (test.test) | | slave-relay-bin.000019 | 3386846 | Write_rows | 5201 | 53745142 | table_id: 72 flags: STMT_END_F | | slave-relay-bin.000019 | 3386921 | Xid | 5201 | 53745173 | COMMIT /* xid=1457451 */ | +------------------------+---------+-------------+-----------+-------------+--------------------------------+

This is transaction 1457451 which is the same on all Galera nodes.

On the new channel master of channel 2 we have to find now the matching binary log. This can be done best by matching times between the relay log and the binary log of master of channel 2 (consider different time zones and that server times are synced with ntpd

On slave:

shell> ll *relay-bin* -rw-rw---- 1 mysql mysql 336 Mai 22 20:32 slave-relay-bin.000018 -rw-rw---- 1 mysql mysql 3387029 Mai 22 20:37 slave-relay-bin.000019

On master of channel 2:

shell> ll *bin-log* -rw-rw---- 1 mysql mysql 2518737 Mai 22 19:57 bin-log.000072 -rw-rw---- 1 mysql mysql 143 Mai 22 19:57 bin-log.000073 -rw-rw---- 1 mysql mysql 165 Mai 22 20:01 bin-log.000074 -rw-rw---- 1 mysql mysql 62953648 Mai 22 20:40 bin-log.000075

It looks like binary log 75 of master 2 matches to relay log of our slave.

Now we have to find the same transaction on the master of channel 2:

mysql> pager grep -B 6 1457451 mysql> SHOW BINLOG EVENTS IN 'bin-log.000075'; mysql> nopager | bin-log.000075 | 53744832 | Write_rows | 5201 | 53744907 | table_id: 72 flags: STMT_END_F | | bin-log.000075 | 53744907 | Xid | 5201 | 53744938 | COMMIT /* xid=1457450 */ | | bin-log.000075 | 53744938 | Query | 5201 | 53745015 | BEGIN | | bin-log.000075 | 53745015 | Table_map | 5201 | 53745067 | table_id: 72 (test.test) | | bin-log.000075 | 53745067 | Write_rows | 5201 | 53745142 | table_id: 72 flags: STMT_END_F | | bin-log.000075 | 53745142 | Xid | 5201 | 53745173 | COMMIT /* xid=1457451 */ | +----------------+----------+-------------+-----------+-------------+---------------------------------------+

We successfully found the transaction and want the position of the next transaction 53745173 where we should continue replicating.

As a last step we have to set the slave to the master of replication channel 2:

mysql> CHANGE MASTER TO master_host='master2', master_port=3306, master_log_file='bin-log.000075', master_log_pos=53745173; mysql> START SLAVE;

After a while the slave has caught up and is ready for the next failover back.

Discussion

We found during our experiments that an IST of a channel master does not lead to a gap or loss of events in the replication stream. So restarting a channel master does not require a channel failover as long as an IST can be used for resyncing the channel master with the Galera Cluster.

The increase of wsrep_cluster_conf_id is NOT an indication that a channel failover is required.

A SST resets the binary logs so after the SST a slave will not replicate any more. So using this method should be safe to use. If you find any situation where you experience troubles with channel failover please let us know.

- Shinguz's blog

- Log in or register to post comments

Comments

MySQL 5.5 and 5.6 ?!

Just to confirm, the above channel failover steps are valid in Galera Cluster for both MySQL versions 5.5 and 5.6.

Enjoy!!